Research Blog

Legal Obligation and Ethical Best Practice: Towards Meaningful Verbal Consent for Voice Assistants

Voice AI assistants (VAs) are now widespread in people’s homes and have transformed the way people engage with technology there. VAs have become a hub for other skills and gadgets and are most commonly used to play music, search for information, and control other IoT devices. To enable extra functionality, VAs facilitate sharing users’ data with third parties. However, this process is not straightforward: VA platforms are responsible for letting users choose what data they are willing to share with skill developers, whilst balancing usability against legal obligations around data protection.

Research has identified concerns around privacy and social interactions in VAs, particularly with regard to users’ incorrect mental models of how they work and their lack of awareness of privacy controls. The social nature of speech might allow VAs to manipulate people, raising questions around data sharing and permissions for sensitive topics like finances or health. Additionally, issues were identified around how skills are certified and moderated, with some skills collecting personal data without using the mandatory permissions API and/or having broken or deficient privacy policies.

One recently introduced voice consent practice is Amazon Alexa’s ‘Voice Forward Consent’ (VFC) feature: the VA reads out a list of requested permissions, to which the user responds ‘I approve’ or ‘no’. The following dialogue shows VFC in use on Alexa (sample from https://developer.amazon.com/en-US/docs/alexa/custom-skills/use-voice-forward-consent.html):

| Speaker | Utterance |
| --- | --- |
| User | Alexa, open Ride Hailer. |
| Alexa | Welcome to Ride Hailer. Where would you like to go? |
| User | The Space Needle. |
| Alexa (skill) | Sure. I need access to your name, current location, and mobile number so that I can find a ride for you. |
| Alexa (OS) | Do you give Ride Hailer permission to access your name, current location, and mobile number? You can say ‘I approve’ or ‘no’. |
| User | I approve. |
| Alexa | Thank you. A ride to the Space Needle from your current location will cost fifteen dollars, and the driver can pick you up in ten minutes. Do you want me to book it? |
| User | Yes. |
| Alexa | Great. Your driver will arrive in ten minutes. |
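The consent turn in this exchange can be sketched in a few lines of Python. This is only an illustration of the dialogue's shape, not Amazon's implementation or API: the function names `join_permissions`, `build_consent_prompt`, and `interpret_reply` are hypothetical, and the rule that anything other than an explicit "I approve" counts as a refusal is an assumption made for the sketch.

```python
from typing import List

# Assumption for this sketch: only this exact phrase is treated as consent.
APPROVE_PHRASE = "i approve"


def join_permissions(permissions: List[str]) -> str:
    """Join permission names into a spoken list, e.g. 'a, b, and c'."""
    if not permissions:
        raise ValueError("at least one permission is required")
    if len(permissions) == 1:
        return permissions[0]
    if len(permissions) == 2:
        return f"{permissions[0]} and {permissions[1]}"
    return ", ".join(permissions[:-1]) + f", and {permissions[-1]}"


def build_consent_prompt(skill_name: str, permissions: List[str]) -> str:
    """Build the platform-level question that lists every requested permission."""
    return (
        f"Do you give {skill_name} permission to access your "
        f"{join_permissions(permissions)}? You can say 'I approve' or 'no'."
    )


def interpret_reply(reply: str) -> bool:
    """Return True only for an explicit approval; every other reply is a refusal."""
    normalized = reply.strip().lower().rstrip(".!")
    return normalized == APPROVE_PHRASE


prompt = build_consent_prompt(
    "Ride Hailer", ["name", "current location", "mobile number"]
)
granted = interpret_reply("I approve.")
```

Note that the sketch grants or refuses all requested permissions together, mirroring the single ‘I approve’ or ‘no’ reply in the sample dialogue.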

The switch to speech, combined with other aspects of VA conversational design, significantly changes the way consent is granted. This raises ethical concerns and creates a variety of requirements for the verbal consent process.

To evaluate these requirements, the SAIS team conducted a Delphi study with experts from academia, industry, and the regulatory and policy sector as participants. The results were used to lay out recommendations for current and future implementations of verbal consent in VAs and other conversational interfaces (Seymour, W., Coté, M., & Such, J., 2023).

A key finding of the study was that consent plays a dual role in VAs and similar systems: it is both a legal obligation and an ethical best practice. VA platforms must therefore ensure that the consent process is designed to adhere to established consent principles. This is important because the capabilities of these interfaces, both in terms of functionality and the amount of personal data they are able to access, will only increase with time.

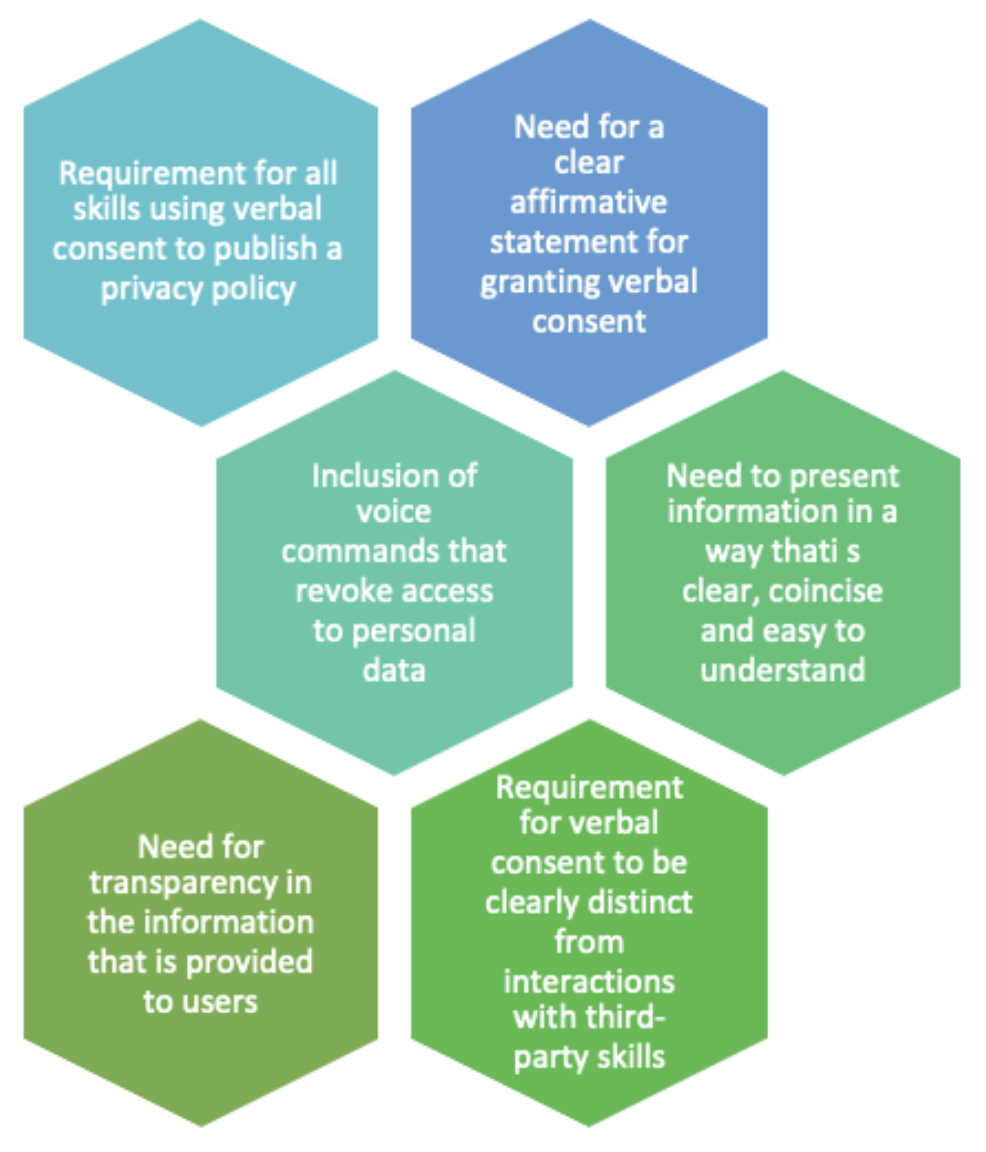

The study highlighted the key areas of agreement and disagreement between experts, deriving recommendations for regulators, skill developers, and VA platforms towards crafting meaningful verbal consent mechanisms. In particular, experts agreed on:

One challenge raised was striking a balance between offering users control over their data and avoiding overwhelming them with choices. A key recommendation for balancing control was to allow users to specify their preferences in advance rather than being asked each time they use a skill. Yet there is still a need for further research into the role of joint controllerships in VA platforms, and into how consent can be made more nuanced to offer users greater control. Collaboration between developers and regulators, to ensure that VA platforms comply with privacy legislation and offer users effective privacy choices, would represent an important step towards ethical best practices.
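The recommendation to let users state their preferences in advance could look something like the sketch below. It is a hypothetical illustration rather than anything proposed in the study or offered by a VA platform: the `Decision` values, the `resolve` and `needs_prompt` helpers, and the choice to fall back to asking when no preference is stored are all assumptions.

```python
from enum import Enum
from typing import Dict, List


class Decision(Enum):
    ALLOW = "allow"  # share without asking
    DENY = "deny"    # never share
    ASK = "ask"      # prompt the user at the time of the request


def resolve(preferences: Dict[str, Decision], data_type: str) -> Decision:
    """Look up a stored preference; with none stored, fall back to asking (assumption)."""
    return preferences.get(data_type, Decision.ASK)


def needs_prompt(preferences: Dict[str, Decision], requested: List[str]) -> List[str]:
    """Return only the requested data types that still require a verbal prompt."""
    return [d for d in requested if resolve(preferences, d) is Decision.ASK]


stored = {"name": Decision.ALLOW, "current location": Decision.DENY}
to_ask = needs_prompt(stored, ["name", "current location", "mobile number"])
```

Here only the mobile number would trigger a spoken consent question, so the user is asked about one item rather than three.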

In conclusion, the study shows that verbal consent mechanisms for VAs can increase usability, but they can also undermine principles core to informed consent. Users must be given the option to opt out of data sharing. The study’s recommendations provide valuable guidance for regulators, skill developers, and VA platforms towards crafting meaningful verbal consent mechanisms in VAs and other conversational interfaces. It is essential to align how verbal consent is managed and perceived before bad practices become embedded in conversational interfaces, where they will later prove difficult or impossible to change.

It is worth noting that the study assumes a European perspective and that GDPR applies. Full details of the survey and analysis are provided as supplemental material and archived at https://osf.io/4vu67.

Seymour, W., Coté, M., & Such, J. (2023). Legal Obligation and Ethical Best Practice: Towards Meaningful Verbal Consent for Voice Assistants. In 2023 CHI Conference on Human Factors in Computing Systems. https://doi.org/10.1145/3544548.3580967

Seymour, W., Coté, M., & Such, J. (2022). Can you meaningfully consent in eight seconds? Identifying Ethical Issues with Verbal Consent for Voice Assistants. In CUI 2022 - 4th Conference on Conversational User Interfaces (CUI ’22). Association for Computing Machinery, New York, NY, USA, 4 pages. https://doi.org/10.1145/3543829.3544521


If you would like to share any comments, or just to get in contact with the SAIS team email: sais-comms@kcl.ac.uk

SAIS is a cross-disciplinary collaboration between the departments of Informatics, Digital Humanities and The Policy Institute at King’s College London, and the Department of Computing at Imperial College London, working with non-academic partners: Microsoft, Humley, Hospify, Mycroft, policy and regulation experts, and the general public, including non-technical users.

William Seymour OUTREACH
